Among the growing number of open standards used in computer graphics, one seems to be missing: a standard for procedural mesh processing interoperability.

I recently started writing such a standard, called Open Mesh Effect, because I needed it to bridge Houdini Engine and Blender without violating the terms of the protective GPL license. At this stage of the draft, I really need other people, with other areas of expertise and interests, to come aboard and give some feedback. This post sketches the context in which Open Mesh Effect was conceived, and how I see its short- and medium-term goals.


A bit of history

There is a long history of file formats and standards used in the computer graphics industry, and it has evolved from closed formats imposed by software vendors toward open formats proposed by the studios. The studios had probably grown tired of writing dirty conversion scripts for each new tool in their pipeline, and of waiting for the next discontinuation announcement to start over. Rest in peace, Softimage.

The largest studios were growing, and artists, like software, were specializing. Studios started to make independent choices for the different building blocks of their pipeline: modeling suite, rigging/animation, surfacing/rendering, compositing. And for these choices to be truly independent, they needed exchange formats adopted by software vendors.

Thus, in the early 2000s, the first open standards started to emerge. In 2003, Industrial Light & Magic published OpenEXR, an image file format dedicated to communication from render engines to compositing software. A year later, Sony Computer Entertainment presented COLLADA for complex 3D assets, and Foundry announced OpenFX for compositing plug-ins. Color management followed a bit later, with Sony Pictures Imageworks releasing OpenColorIO around 2010.

A second wave of open standards arose in the 2010s. Sony Pictures Imageworks and ILM joined forces to develop the Alembic cache format in 2011, to replace the good old OBJ files from the early 90s. Alembic does not cover volumes, so DreamWorks Animation created OpenVDB (2013) to handle volumetric caches. These two formats are now used everywhere, but they are meant for caching. For more structured scene data, Pixar presented USD (Universal Scene Description) in 2014.

USD is a large standard that intends to cover many steps of the pipeline, so it takes longer to adopt, but it is definitely at the center of attention for many actors, such as SideFX, Unity and Blender.

On the surfacing side, the standard that emerged is not a file format, because lighting models still change a lot and can be very different for specific materials. It is rather a domain-specific language, called Open Shading Language (Sony Pictures Imageworks, 2009), which is now well integrated into many render engines.

Missing Standard

So, at this point, it feels like we have open standards for everything. And the dynamics of the whole industry benefit from such win-win compromises: they foster shared innovation, and attract developers by giving them something more interesting to do than "hey, could you re-implement this tool our competitor has?".

Everybody can agree on this, which is why there are Standard Development Organisations like The Khronos Group (OpenGL, Vulkan, COLLADA, glTF), The Open Effects Association (OpenFX), and more recently the ASWF (Academy Software Foundation). (Whose initiative was this, by the way? Did the Academy want to take this over, or did the companies gather and ask the Academy to frame it?)

But do we really have standards for everything? What is still painful to transfer, what breaks every time we switch from one software package to another? Non-destructive operations on meshes are a prime example. Hard-ops, subdivision, displacement: everybody uses them, and everybody likes them. Yet they remain impossible to share among software packages. When exporting a scene, one must either drop them or bake them.

These procedural mesh effects have been around for a while under different names, but they all end up being more or less the same thing. They are called modifiers in Blender and 3ds Max, and deformers in Cinema 4D; in Houdini or Maya, they are more generic nodes. Think of a twist effect, for instance: all modeling software implements one, but no exchange format supports it.
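To make this concrete, here is a minimal sketch, in Python for readability, of what such an effect boils down to: a pure function from an input mesh and a few parameters to an output mesh. The mesh layout (point positions plus, for each face, a list of point indices) and the names `Mesh` and `twist` are illustrative assumptions, not the data model of any particular host.

```python
import math
from dataclasses import dataclass


@dataclass
class Mesh:
    # Illustrative polygon mesh: point positions, and for each face the
    # indices of the points around it.
    points: list[tuple[float, float, float]]
    faces: list[list[int]]


def twist(mesh: Mesh, angle_per_unit: float) -> Mesh:
    """Hypothetical twist effect: rotate each point around the Z axis by an
    angle proportional to its height. Topology is left untouched."""
    new_points = []
    for x, y, z in mesh.points:
        a = angle_per_unit * z
        c, s = math.cos(a), math.sin(a)
        new_points.append((c * x - s * y, s * x + c * y, z))
    # Build a new mesh instead of editing the input: the effect stays
    # non-destructive and can be re-evaluated when the parameter changes.
    return Mesh(new_points, [list(face) for face in mesh.faces])
```

Because the input is never modified, a host can simply re-run the function whenever the parameter changes, which is what every modifier stack or node graph does in one form or another.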

There is an understandable reason for this. There can be a virtually infinite number of procedural effects, so a format covering all possible mesh effects does not make sense, or would require writing and sharing so much code that nobody would implement it. But there is a solution, already adopted in compositing: shared plug-ins. This is the whole point of OpenFX. The exchange file format just references the plug-ins to load, and the very same piece of procedural effect code runs in any software.
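The shared plug-in idea can be sketched in a few lines, under assumed names: the exchanged scene stores only a plug-in identifier and parameter values, and each host resolves the identifier against the plug-ins it has loaded. The registry, the JSON layout and the identifier `"com.example.offset"` are hypothetical, chosen only for illustration.

```python
import json
from typing import Callable

Point = tuple[float, float, float]
Effect = Callable[[list[Point], dict], list[Point]]

# Hypothetical registry, filled by the host as it loads plug-in binaries:
# plug-in identifier -> effect function.
REGISTRY: dict[str, Effect] = {}


def register(identifier: str) -> Callable[[Effect], Effect]:
    def wrap(fn: Effect) -> Effect:
        REGISTRY[identifier] = fn
        return fn
    return wrap


@register("com.example.offset")  # hypothetical plug-in identifier
def offset(points: list[Point], params: dict) -> list[Point]:
    dx, dy, dz = params["delta"]
    return [(x + dx, y + dy, z + dz) for x, y, z in points]


def cook_scene(points: list[Point], scene_json: str) -> list[Point]:
    """Re-run, in order, every effect referenced by the exchanged scene.

    The scene stores only identifiers and parameter values, never the
    effect code itself.
    """
    for entry in json.loads(scene_json)["effects"]:
        effect = REGISTRY.get(entry["plugin"])
        if effect is None:
            raise LookupError(f"plug-in not available: {entry['plugin']}")
        points = effect(points, entry["params"])
    return points


scene = '{"effects": [{"plugin": "com.example.offset", "params": {"delta": [0, 0, 1]}}]}'
print(cook_scene([(0, 0, 0)], scene))  # prints [(0, 0, 1)]
```

Any host that has loaded the same plug-in produces the same result from the same file, which is exactly what baking cannot offer once the parameters need to change.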

In a way, this is already what happens for some of the most common effects. Take subdivision surfaces: all major 3D modeling software packages use the very same implementation of subdivision, called OpenSubdiv (Pixar, 2013). This now allows formats such as USD to agree on the meaning of subdivision parameters.

NB: Although USD mentions the notion of non-destructive operations, this only refers to parameter overrides, and the documentation clearly states that USD is "Not an execution system". So even though it can do a lot, it does not target things like procedural mesh effects.

Houdini Engine and Blender

As the leader in procedural modeling, SideFX noticed early on the need for a portable way of sharing parametrized assets. This was particularly needed for collaboration with game engines, in order to defer the baking of geometry to the host program. So they isolated Houdini Engine, an embeddable geometry baking (or "cooking", as they say) engine, from the rest of Houdini.

The programming interface of the Houdini Engine, called HAPI, is in a way already an API for procedural mesh effects, which is roughly what we are looking for. But it is by no means an open standard, and it was never meant to interface with anything other than Houdini. And remember that standards are better adopted when they come from users rather than from software vendors.

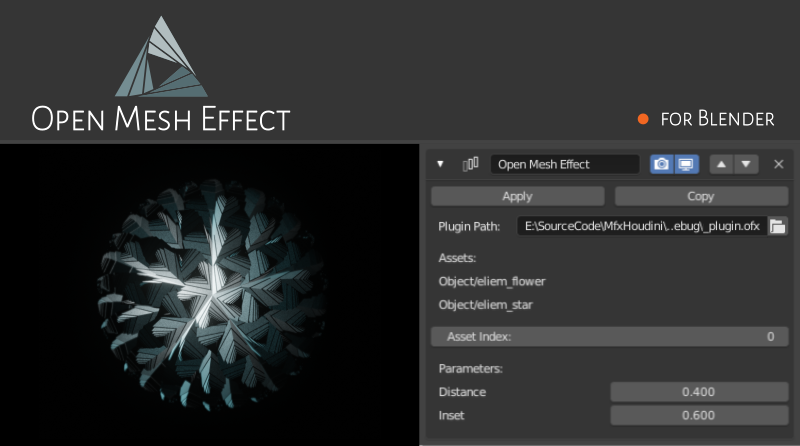

Anyway, considering the conceptual similarities between Blender's modifiers and Houdini's geometry nodes, I started working on an integration of Houdini Engine into Blender, so that any Houdini asset could become a Blender modifier. It worked, and the community clearly showed interest in it.

#houdini modifier for #blender now with Float parameters! Only floats and OBJ assets for now, but enough for a first alpha - coming soon! pic.twitter.com/7o7wE44ECZ

— Élie Michel 🍪 (@exppad) July 4, 2019

The problem was: Blender must remain open source, and to ensure this it is released under the GPL license, which I respect. This means that it is not allowed to distribute a modified version of Blender unless that version can itself be fully open source. Bundling Blender with the Houdini Engine was hence not legally possible.

It thus became clear that the standard we need is neither the Houdini API nor any other existing one, so I started to draft Open Mesh Effect.

Open Mesh Effect

I could not resign myself to believing that something as useful as this Houdini Engine for Blender bridge was purely and simply impossible, so I started looking for examples of GPL-licensed code interacting with proprietary software. To my pleasant surprise, a precedent was close at hand: Natron is GPL software that can load closed-source plug-ins.

This interaction of GPL and closed-source code is made possible by an open standard mentioned previously: OpenFX. Not only is this an interesting legal point, but there are also many similarities between 2D image effects and 3D mesh effects. You might have noticed it if you are used to both Houdini and Nuke: nodes, links, parameters, curves are all the same, just with slightly different interfaces.

The OpenFX standard is well designed, and already separates the ofxCore part, which only describes a generic plug-in mechanism, from the ofxImageEffect part, which is specific to compositing. Even some elements of the image effect API can be reused for mesh effects, including ofxParam and part of the design of effect nodes. I hence designed ofxMeshEffect as an extension of OpenFX, reusing the very same plug-in mechanism.
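The core mechanism is small enough to sketch. In `ofxCore.h`, a plug-in binary exports `OfxGetNumberOfPlugins` and `OfxGetPlugin`, and each returned `OfxPlugin` struct carries an API name, an API version, an identifier, a version number and a `mainEntry` function that receives named actions such as `OfxActionLoad` or `OfxActionDescribe`. Below is a Python mirror of that C interface, for illustration only: the `setHost` callback is left out, the plug-in identifiers are made up, and the mesh API string `"OfxMeshEffectPluginAPI"` is an assumption here.

```python
from dataclasses import dataclass
from typing import Callable, Optional

kOfxStatOK = 0             # status codes, as defined in ofxCore.h
kOfxStatReplyDefault = 14

# mainEntry(action, handle, inArgs, outArgs) -> status code
MainEntry = Callable[[str, object, Optional[dict], Optional[dict]], int]


@dataclass
class OfxPlugin:
    # Python stand-in for the C struct OfxPlugin (setHost omitted).
    pluginApi: str
    apiVersion: int
    pluginIdentifier: str
    pluginVersionMajor: int
    pluginVersionMinor: int
    mainEntry: MainEntry


def mesh_main(action, handle, in_args, out_args) -> int:
    # A real plug-in would declare its inputs and parameters on
    # "OfxActionDescribe" and compute its output in a cook action.
    if action in ("OfxActionLoad", "OfxActionDescribe"):
        return kOfxStatOK
    return kOfxStatReplyDefault  # let the host fall back to its default


# What one plug-in binary could expose: a mesh effect and an image effect.
PLUGINS = [
    OfxPlugin("OfxMeshEffectPluginAPI", 1, "com.example.Twist", 1, 0, mesh_main),
    OfxPlugin("OfxImageEffectPluginAPI", 1, "com.example.Blur", 1, 0,
              lambda action, handle, in_args, out_args: kOfxStatOK),
]


def OfxGetNumberOfPlugins() -> int:
    return len(PLUGINS)


def OfxGetPlugin(nth: int) -> OfxPlugin:
    return PLUGINS[nth]


def discover(api: str) -> list:
    """Host side: keep the plug-ins implementing `api`, then send them the load action."""
    found = []
    for i in range(OfxGetNumberOfPlugins()):
        plugin = OfxGetPlugin(i)
        if plugin.pluginApi != api:
            continue  # e.g. a mesh host ignores image effects
        if plugin.mainEntry("OfxActionLoad", None, None, None) == kOfxStatOK:
            found.append(plugin.pluginIdentifier)
    return found


print(discover("OfxMeshEffectPluginAPI"))  # prints ['com.example.Twist']
```

The point of the design is that nothing in this discovery step is specific to images: only the API string and the set of actions change, so the same loading code can serve both kinds of effects.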

Another pattern shared by image effects and mesh effects is the notion of an OpenFX host's context. An OpenFX plug-in may run in fully node-based software like Nuke or Natron, but also in less flexible software such as After Effects, where effects are simply stacked, each with one input and one output. This is exactly the same distinction as between Houdini's or Maya's nodes and the modifier stacks of 3ds Max or Blender.
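The two kinds of host can be contrasted in a few lines. This Python sketch is not OpenFX code: it assumes that effects are plain functions, that a stacked ("filter") effect has exactly one input and one output, and that a node ("general") effect receives a dictionary of named inputs, which is roughly how the OpenFX filter and general contexts differ. All names are hypothetical.

```python
from typing import Callable

Point = tuple[float, float, float]
Mesh = list[Point]  # a mesh reduced to its points, to keep the sketch short
FilterEffect = Callable[[Mesh], Mesh]
GeneralEffect = Callable[[dict[str, Mesh]], Mesh]


def run_stack(mesh: Mesh, stack: list[FilterEffect]) -> Mesh:
    """Modifier-stack host: each effect's output feeds the next effect."""
    for effect in stack:
        mesh = effect(mesh)
    return mesh


def run_graph(nodes: dict[str, tuple[GeneralEffect, dict[str, str]]],
              sources: dict[str, Mesh], target: str) -> Mesh:
    """Node-based host: recursively evaluate the inputs of `target`.

    `nodes` maps a node name to (effect, {input name: upstream node name}).
    """
    if target in sources:
        return sources[target]
    effect, inputs = nodes[target]
    evaluated = {name: run_graph(nodes, sources, up) for name, up in inputs.items()}
    return effect(evaluated)


def shift_x(mesh: Mesh) -> Mesh:
    return [(x + 1.0, y, z) for x, y, z in mesh]


def merge(inputs: dict[str, Mesh]) -> Mesh:
    # Two inputs: only possible in the node-based host.
    return inputs["A"] + inputs["B"]


box = [(0.0, 0.0, 0.0)]
print(run_stack(box, [shift_x, shift_x]))  # prints [(2.0, 0.0, 0.0)]

graph = {
    # The same single-input effect, wrapped as a node with one named input.
    "shifted": (lambda ins: shift_x(ins["Input"]), {"Input": "box"}),
    "merged": (merge, {"A": "box", "B": "shifted"}),
}
print(run_graph(graph, {"box": box}, "merged"))  # prints [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
```

A single-input effect fits in both hosts, while a multi-input effect like `merge` only makes sense in the node-based one; declaring which contexts a plug-in supports is what lets a host know whether it can use it.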

The Open Mesh Effect branch of Blender

My Blender-Houdini use case was a good challenge for this new draft. I wrote an Open Mesh Effect branch of Blender that behaves a bit like the original Houdini Engine for Blender branch, but uses the Mesh Effect API rather than HAPI. In a separate project (it is important not to distribute them together, so as not to violate the GPL), I started an Open Mesh Effect plug-in that internally calls the Houdini Engine. The result is the same as the original project, but without the GPL violation, and with a new open standard.

Back to the #Houdini modifier for #Blender3d, this time without the #GPL licensing issue thanks to #OpenMeshEffect. A lot of clean up work before it can be released. pic.twitter.com/REh0rpBvpZ

— Élie Michel 🍪 (@exppad) July 15, 2019

Future prospects

So, before anything else, I need to finish implementing the current standard in Blender. At the moment, it works, but it is still a bit hacky.

Then at least a second example host is needed, one that supports multiple inputs. I was thinking of Maya or Houdini, since both provide a comfortable SDK. It should be fairly straightforward, because I took care to isolate the Open Mesh Effect code in the Blender branch so that it can be distributed under a more permissive license, which allows reusing it in Maya and Houdini. This piece of code is roughly the equivalent of OpenFX's "HostSupport".

So much for the host side. Most of the diversity of use cases will actually come from the plug-in side, and this is where we should take care to be as flexible as possible.

I would love to see a plug-in for programmable effects: something like Houdini's VEX node, but open source and usable in Blender or any other Open Mesh Effect host. Somebody suggested looking at ISPC, or designing some other domain-specific language. Or just cloning VEX.

There is also a large number of open-source mesh processing effects in VCGlib, the core of MeshLab, which is known (and has been awarded) for being a good platform for sharing research work. Open Mesh Effect must be able to wrap it; otherwise, we should revisit its design. Kitware's VTK might contain interesting algorithms too.

Once there are interesting plug-ins around, it will become useful to introduce a USD schema for exchanging effects and their parameters.

Of course, there is also work to do on documentation, simple plug-in examples, and test suites to help implement new hosts.

Limitations

Besides all the unwanted limitations that might emerge when stress-testing the standard a bit, here are some that are already a concern.

The whole design is currently limited to polygonal meshes. This is a huge limitation: everything suggests that polygonal meshes are only one model among many, so alternative models such as volumes, hierarchies and point clouds should have their own Open Mesh Effect counterparts.

Another key point to which I haven't given as much thought as it deserves is memory management. The original Image Effect part of OpenFX has a lot to say about it, so I should take a deeper look at it. Basically, we need ways to minimize memory allocations, which might require a very flexible memory layout, at the cost of plug-ins that are a bit more painful to write.
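One common way to obtain such a flexible layout, sketched here as an assumption rather than as the current Open Mesh Effect design, is to describe each attribute by a buffer, a byte offset, a stride and a component count. A plug-in can then read the host's data in place, whether the host stores attributes interleaved or in separate arrays, without any copy or allocation. The `AttributeView` class is hypothetical.

```python
import struct
from dataclasses import dataclass


@dataclass
class AttributeView:
    """Hypothetical strided, read-only view on host-owned memory (no copy)."""
    buffer: memoryview  # raw bytes owned by the host
    offset: int         # byte offset of the first element
    stride: int         # bytes between two consecutive elements
    components: int     # e.g. 3 for a position, 2 for a UV
    count: int          # number of elements

    def __getitem__(self, i: int) -> tuple:
        if not 0 <= i < self.count:
            raise IndexError(i)
        start = self.offset + i * self.stride
        return struct.unpack_from(f"<{self.components}f", self.buffer, start)


# A host storing, per point, an interleaved position (3 floats) and UV (2 floats):
# 5 little-endian 32-bit floats, hence a stride of 20 bytes.
data = struct.pack("<10f", 0.0, 0.0, 0.0, 0.5, 0.5, 1.0, 2.0, 3.0, 0.25, 0.75)
buf = memoryview(bytearray(data))
positions = AttributeView(buf, offset=0, stride=20, components=3, count=2)
uvs = AttributeView(buf, offset=12, stride=20, components=2, count=2)
print(positions[1], uvs[1])  # prints (1.0, 2.0, 3.0) (0.25, 0.75)
```

The price is the one mentioned above: the plug-in can no longer assume a tightly packed array and must always go through the offset and stride, which makes its code somewhat more tedious to write.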

Current status of the standard

OK, even though I've been calling it a "standard" throughout this post, so far I am roughly the only one who has worked on it. This is a work in progress on which I would really like feedback, so as not to reinvent the wheel in my basement, and to balance everybody's interests.

So feel free to start working with it, keeping in mind that anything might change, and that you are invited to suggest those changes!

Some key naming elements might change, for instance. At the moment, the same word "input" is used for both effect inputs and outputs, as they play very symmetrical roles. Even the name "Open Mesh Effect" may change, if we want to open it to representations other than polygonal meshes.
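To illustrate that symmetry, here is a small Python sketch with hypothetical names: the output is declared through the very same call as the input, and the two slots only differ by who reads and who writes the mesh during the cook.

```python
from dataclasses import dataclass, field


@dataclass
class MeshInput:
    """One named mesh slot; the same type serves for inputs and for the output."""
    name: str
    points: list = field(default_factory=list)


@dataclass
class EffectDescriptor:
    inputs: dict = field(default_factory=dict)

    def input_define(self, name: str) -> MeshInput:
        # Hypothetical "define input" call: an output is simply declared
        # as one more slot, which the plug-in writes to instead of reading.
        slot = MeshInput(name)
        self.inputs[name] = slot
        return slot


def describe(effect: EffectDescriptor) -> None:
    effect.input_define("Input")
    effect.input_define("Output")


def cook(effect: EffectDescriptor) -> None:
    # Read from the "Input" slot, write to the "Output" slot (here: scale Z by 2).
    src, dst = effect.inputs["Input"], effect.inputs["Output"]
    dst.points = [(x, y, z * 2) for x, y, z in src.points]
```

Sharing one type keeps the API small, but it also explains the naming confusion: a reader has to know which slot is written to, which is why a dedicated word for outputs may be worth introducing.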

People I'd love to hear feedback from

Anybody's feedback is useful, whether it comes from users, developers, studios or anyone else. But in particular, I'd be interested in the wise input of several people who necessarily have more experience than I do:

SideFX: You are the first concerned by this, since Open Mesh Effect is to Houdini's SOPs what OpenFX is to Nuke's nodes. What do you see as the pros and cons? Do you share my opinion that such an open API is worthwhile?

OpenFX: When designing OpenFX, what were the hardest choices to make? Do some of you regret parts of the design? What do you think about this use of the OpenFX core?

Foundry: In the end, what is your assessment of the release of OpenFX? Would you do it again? Did you benefit enough from the ecosystem it created?

ASWF: The foundation is still young, but is there already some kind of working group thinking about the current state of the ecosystem of standards, and about what is missing? If so, were you working on something similar to Open Mesh Effect? How relevant do you think it is? I focused on OpenFX because, for many reasons, it felt like a good match, but from your perspective, are there other open standards that would be a better basis for Open Mesh Effect? Do you provide legal advice to prevent bad surprises like patent trolling when developing open standards?

Blender: Do you agree that the approach I adopted to bind Houdini and Blender respects the spirit of the GPL? I initially did all of this Open Mesh Effect work for you, and got almost no feedback. Would you, in principle, be willing to merge the Open Mesh Effect branch once it is ready?

Plug-in writers: If you have tried to follow the plug-in writing guide, or have experience writing plug-ins for the original OpenFX Image Effects, what are the most painful steps? Are there things that you felt were simply impossible to achieve with the current design?

Others: Once again, anybody else is welcome to give feedback, for instance to @exppad on Twitter.

Happy SIGGRAPH to everybody!